Software Delivery Guide

I use the term “software delivery” to indicate the steps from a developer finishing work on a new feature, to that feature being used in production. In my younger days, the time for this would typically measured in months. One of the great advances in software development in the last two decades has been cutting this time, sometimes down to minutes. This means that features are used to generate value more quickly, both increasing the return on investment to build that feature, and providing fast feedback for future development.

There are many initiatives that have contributed to this change. The mindset of agile software development, has made the case for short cycle times and fast feedback. The Extreme Programming practice of Continuous Integration encourages all members of a development team to integrate their work daily, instead of developing features in isolation for days or weeks. The Devops movement encourages software developers, operations staff, and everyone else involved in delivery to work together - avoiding hand-offs that add delays and brittleness. Infrastructure-As-Code takes advantage of our cloud age ability to rapidly deploy and provision new servers. Pulling this all together is the practice of Continuous Delivery: which always keeps the software product in a releasable state, allowing fast release of features and rapid response to any failures.

A guide to material on martinfowler.com about software delivery and devops.

Continuous Integration

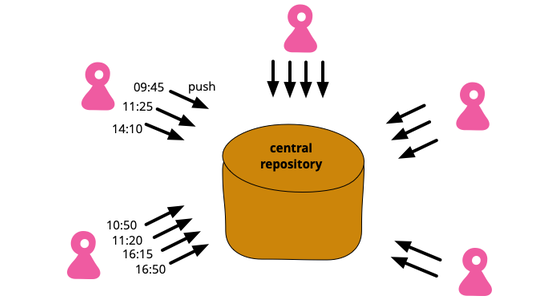

Continuous Integration is a software development practice where each member of a team merges their changes into a codebase together with their colleagues changes at least daily. Each of these integrations is verified by an automated build (including test) to detect integration errors as quickly as possible. Teams find that this approach reduces the risk of delivery delays, reduces the effort of integration, and enables practices that foster a healthy codebase for rapid enhancement with new features.

Dev Ops Culture

Agile software development has broken down some of the silos between requirements analysis, testing and development. Deployment, operations and maintenance are other activities which have suffered a similar separation from the rest of the software development process. The DevOps movement is aimed at removing these silos and encouraging collaboration between development and operations.

Continuous Delivery

Continuous Delivery is a software development discipline where you build software in such a way that the software can be released to production at any time.

You’re doing continuous delivery when:

- Your software is deployable throughout its lifecycle

- Your team prioritizes keeping the software deployable over working on new features

- Anybody can get fast, automated feedback on the production readiness of their systems any time somebody makes a change to them

- You can perform push-button deployments of any version of the software to any environment on demand

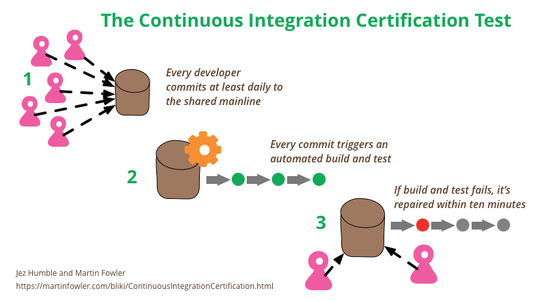

Continuous Integration Certification

Continuous Integration is a popular technique in software development. At conferences many developers talk about how they use it, and Continuous Integration tools are common in most development organizations. But we all know that any decent technique needs a certification program — and fortunately one does exist. Developed by one of the foremost experts in continuous delivery and devops, it’s known for being remarkably rapid to administer, yet very insightful for its results. Although it’s quite mature, it isn’t as well known as it should be, so as a fan of the technique I think it’s important for me to share this certification program with my readers. Are you ready to be certified for Continuous Integration? And how will you deal with the shocking truth that taking the test will reveal?

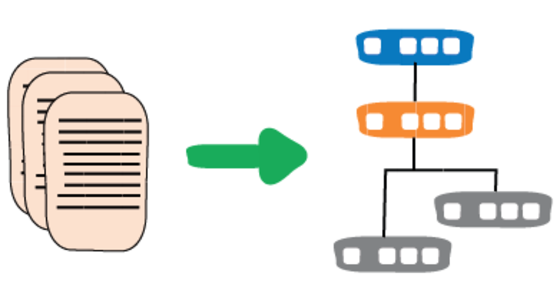

Deployment Pipeline

One of the challenges of an automated build and test environment is you want your build to be fast, so that you can get fast feedback, but comprehensive tests take a long time to run. A deployment pipeline is a way to deal with this by breaking up your build into stages. Each stage provides increasing confidence, usually at the cost of extra time. Early stages can find most problems yielding faster feedback, while later stages provide slower and more through probing. Deployment pipelines are a central part of ContinuousDelivery.

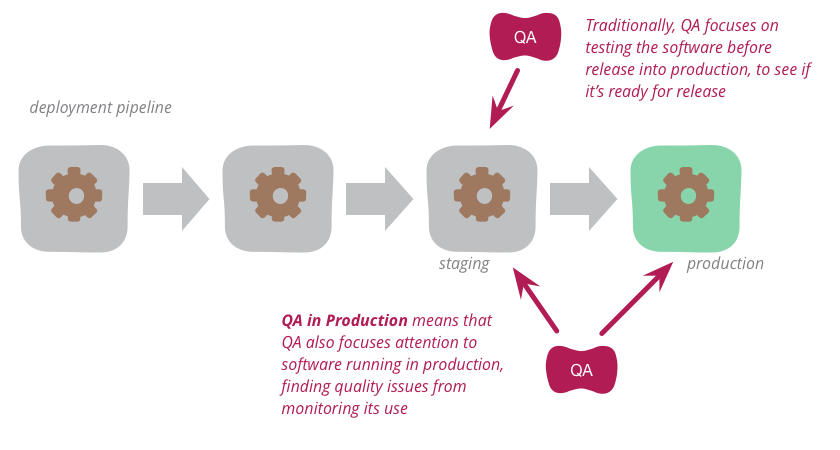

QA in Production

Traditionally, QA focuses on testing the software before release into production to see if it's ready for such release. But increasingly, modern QA organizations are also focusing attention onto the software running in production. By analyzing logs and other monitoring tools, they find quality problems to highlight to the development organization. This approach works particularly well with organizations that use continuous delivery to put new versions of the software into production rapidly and reliably.

Continuous Delivery

The definitive book on Continuous Delivery, which outlines the practices needed to bring code into production rapidly and safely. The key aspects are collaboration between everyone involved in the release process and automating as many aspects of that process as you can. The book goes through the foundations of configuration management, automated testing, and continuous integration - on which it shows how to build deployment pipelines to take integrated, tested code live. The book details the delivery ecosystem, managing infrastructure, environments and data.

Talk: Continuous Delivery

Continuous Delivery is now becoming a central practice for effective software delivery organizations. This talk explains the essentials of how it works, the role of a deployment pipeline, the difference between continuous delivery and continuous deployment, and some vital ingredients. It also covers the three main benefits of Continuous Delivery: reducing deployment risk, believable progress, and user feedback.

Useful Patterns

While Continuous Delivery is an essential practice for a effective software development organization, it takes time to learn. Teams need to learn new patterns to put into their code base that enable the automation and observability needs. While I haven't done a comprehensive list of such patters, I've collected several important ones on this site

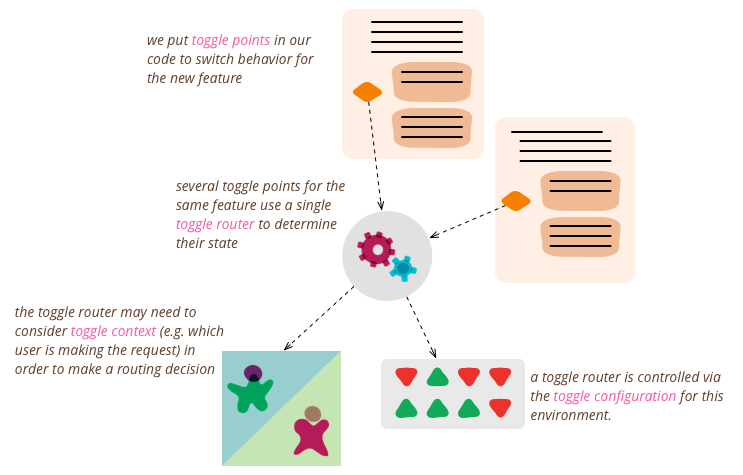

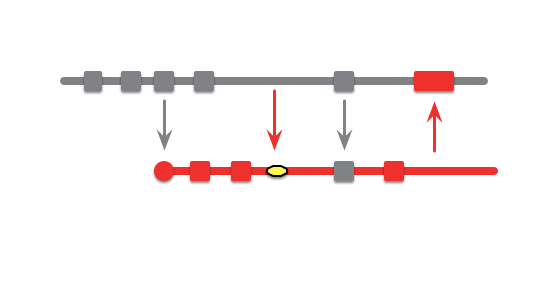

Feature Toggles (aka Feature Flags)

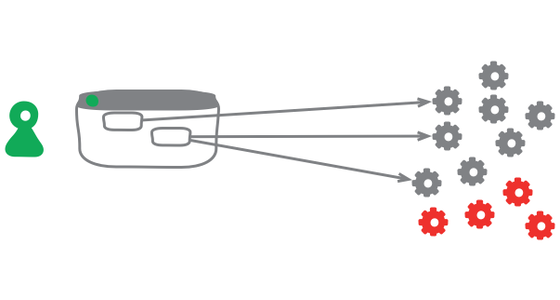

Feature Toggles (often also refered to as Feature Flags) are a powerful technique, allowing teams to modify system behavior without changing code. They fall into various usage categories, and it's important to take that categorization into account when implementing and managing toggles. Toggles introduce complexity. We can keep that complexity in check by using smart toggle implementation practices and appropriate tools to manage our toggle configuration, but we should also aim to constrain the number of toggles in our system.

Patterns for Managing Source Code Branches

Modern source-control systems provide powerful tools that make it easy to create branches in source code. But eventually these branches have to be merged back together, and many teams spend an inordinate amount of time coping with their tangled thicket of branches. There are several patterns that can allow teams to use branching effectively, concentrating around integrating the work of multiple developers and organizing the path to production releases. The over-arching theme is that branches should be integrated frequently and efforts focused on a healthy mainline that can be deployed into production with minimal effort.

Blue Green Deployment

One of the goals that my colleagues and I urge on our clients is that of a completely automated deployment process. Automating your deployment helps reduce the frictions and delays that crop up in between getting the software “done” and getting it to realize its value. Dave Farley and Jez Humble are finishing up a book on this topic - Continuous Delivery. It builds upon many of the ideas that are commonly associated with Continuous Integration, driving more towards this ability to rapidly put software into production and get it doing something. Their section on blue-green deployment caught my eye as one of those techniques that's underused, so I thought I'd give a brief overview of it here.

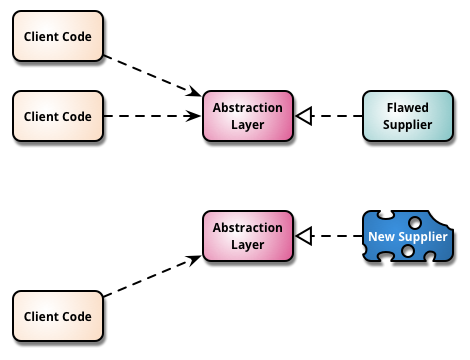

Branch By Abstraction

“Branch by Abstraction” is a technique for making a large-scale change to a software system in gradual way that allows you to release the system regularly while the change is still in-progress.

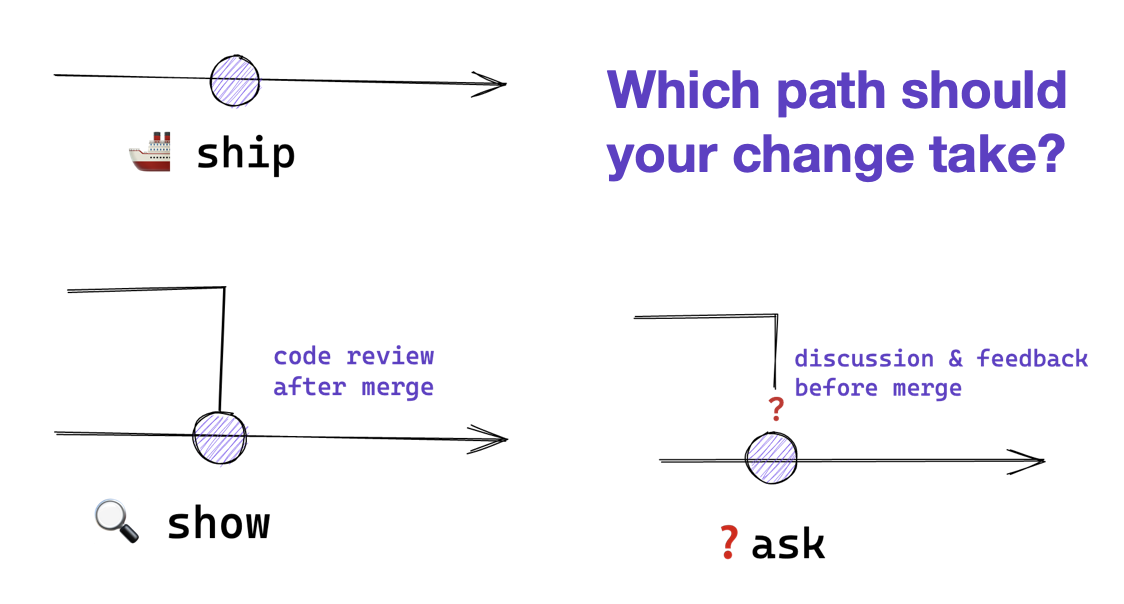

Ship / Show / Ask

Ship/Show/Ask is a branching strategy that combines the features of Pull Requests with the ability to keep shipping changes. Changes are categorized as either Ship (merge into mainline without review), Show (open a pull request for review, but merge into mainline immediately), or Ask (open a pull request for discussion before merging).

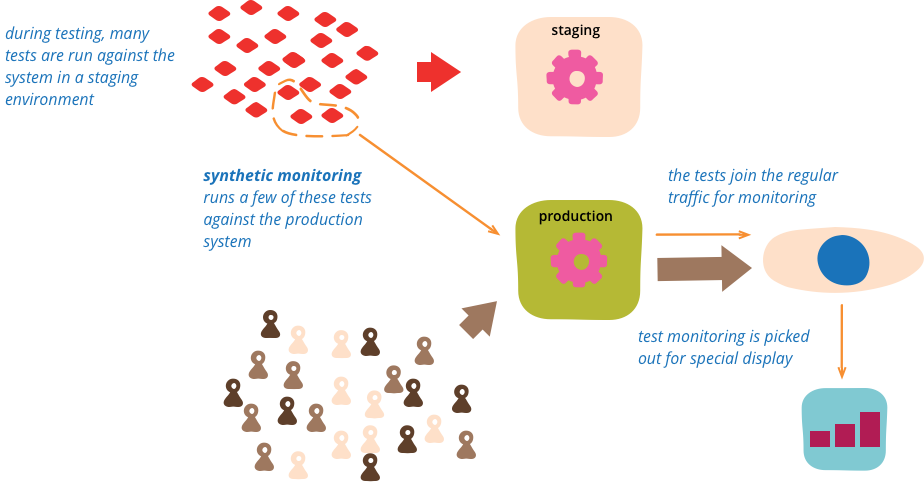

Synthetic Monitoring

Synthetic monitoring (also called semantic monitoring ) runs a subset of an application's automated tests against the live production system on a regular basis. The results are pushed into the monitoring service, which triggers alerts in case of failures. This technique combines automated testing with monitoring in order to detect failing business requirements in production.

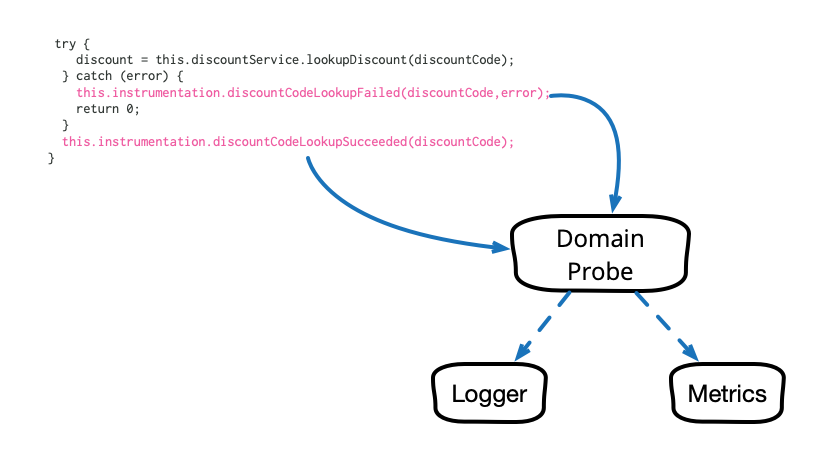

Domain-Oriented Observability

Observability in our software systems has always been valuable and has become even more so in this era of cloud and microservices. However, the observability we add to our systems tends to be rather low level and technical in nature, and too often it seems to require littering our codebase with crufty, verbose calls to various logging, instrumentation, and analytics frameworks. This article describes a pattern that cleans up this mess and allows us to add business-relevant observability in a clean, testable way.

Canary Release

Canary release is a technique to reduce the risk of introducing a new software version in production by slowly rolling out the change to a small subset of users before rolling it out to the entire infrastructure and making it available to everybody.

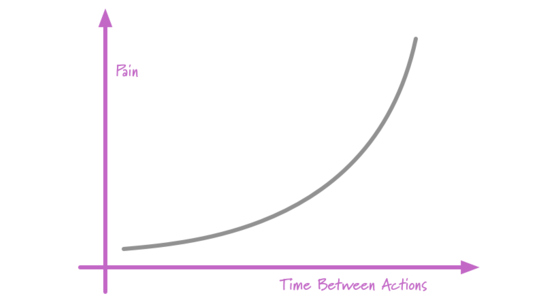

Frequency Reduces Difficulty

One of my favorite soundbites is: if it hurts, do it more often. It has the happy property of seeming nonsensical on the surface, but yielding some valuable meaning when you dig deeper

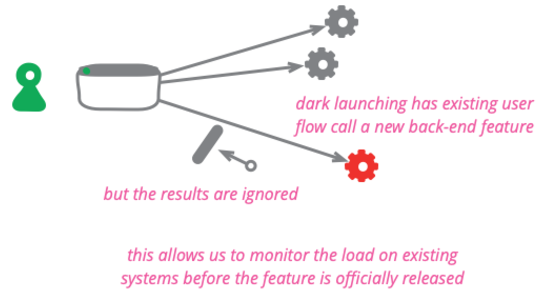

Dark Launching

Dark launching a feature means taking a new or changed back-end behavior and calling it from existing users without the users being able to tell it's being called. It's done to assess the additional load and performance impacts upon the system before making a public announcement of the new capability.

Keystone Interface

Software development teams find life can be much easier if they integrate their work as often as they can. They also find it valuable to release frequently into production. But teams don't want to expose half-developed features to their users. A useful technique to deal with this tension is to build all the back-end code, integrate, but don't build the user-interface. The feature can be integrated and tested, but the UI is held back until the end until, like a keystone, it's added to complete the feature, revealing it to the users.

Incremental Migration

Like any profession, software development has it's share of oft-forgotten activities that are usually ignored but have a habit of biting back at just the wrong moment. One of these is data migration.

Reproducible Build

One of the prevailing assumptions that fans of Continuous Integration have is that builds should be reproducible. By this we mean that at any point you should be able to take some older version of the system that you are working on and build it from source in exactly the same way as you did then.

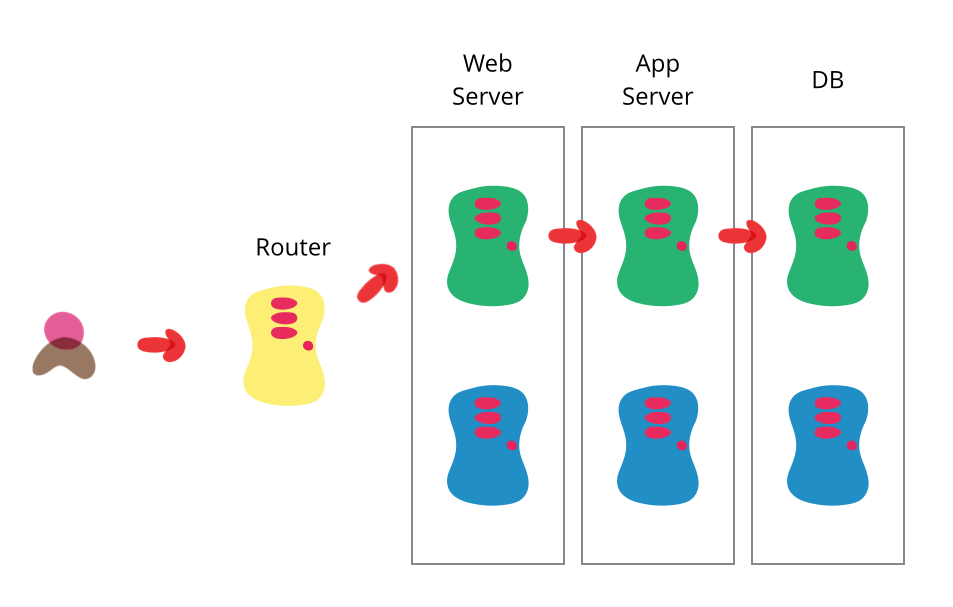

Catastrophic Failover

One of the oft advertised features of modern application servers is that they provide failover in a cluster. Clustering improves the reliability of your application, if one of your servers goes down, you have some more up to server your customers. Failover can add even more reliability, if a server goes down in the middle of a interaction the cluster can move that interaction to another server.

However this can be a problem.

Infrastructure in the Cloud Age

A central property of Continuous Delivery is the automation of the build process for an application, allowing the system to be deployed into any environment quickly. But the value of this ability is limited if it's hard to create and modify computing infrastructure. The rise of cloud computing has opened up a world where we can create and provision new servers from a command-line invocation. Using Infrastructure-As-Code to take advantage of this shift from the Iron Age of infrastructure into this new Cloud Age both enables continuous delivery, and also applies the principles of continuous delivery to how we think about building infrastructure.

Infrastructure As Code

Infrastructure as code is the approach to defining computing and network infrastructure through source code that can then be treated just like any software system. Such code can be kept in source control to allow auditability and ReproducibleBuilds, subject to testing practices, and the full discipline of ContinuousDelivery. It's an approach that's been used over the last decade to deal with growing CloudComputing platforms and will become the dominant way to handle computing infrastructure in the next.

Configuration Synchronization

Automated configuration tools (such as CFEngine, Puppet, or Chef) allow you to avoid SnowflakeServers by providing recipes to describe the configuration of elements of a server. Configuration synchronization continually applies these specifications, either on a regular schedule or when it changes, to server instances throughout their lifetime. If someone makes a change to a server outside the tool, it will be reverted to the centrally specified configuration the next time the server is synchronized. If some configuration change is needed, it's made in the configuration specification (recipes, manifests, or whatever the particular configuration tool calls it), and is then applied to all relevant servers across the infrastructure.

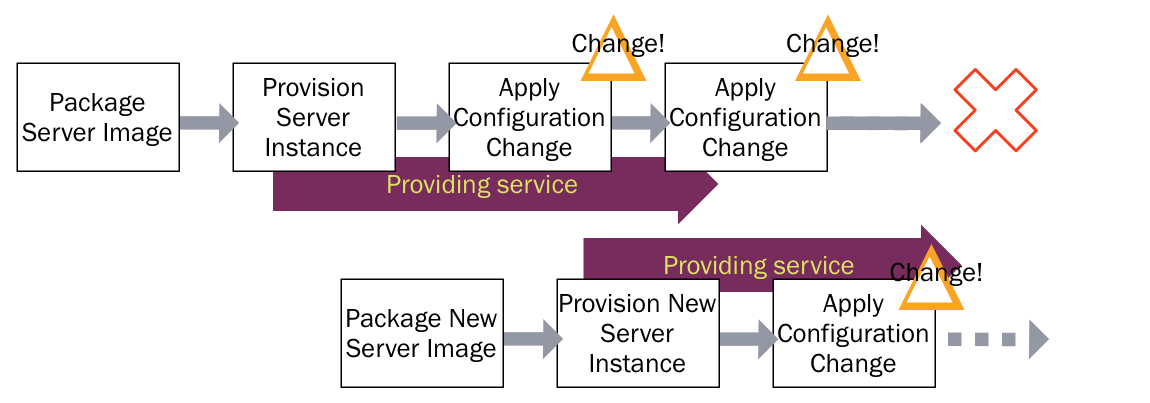

Immutable Server

Automated configuration tools (such as CFEngine, Puppet, or Chef) allow you to specify how servers should be configured, and bring new and existing machines into compliance. This helps to avoid the problem of fragile SnowflakeServers. Such tools can create PhoenixServers that can be torn down and rebuilt at will. An Immutable Server is the logical conclusion of this approach, a server that once deployed, is never modified, merely replaced with a new updated instance.

Cloud Computing

“Cloud” has become a very over-hyped term over the last few years. One of the characteristics of over-hyped words is that they have little or no definition to them (yes NosqlDefinition I'm looking at you).

As it turns out there is an excellent definition of cloud computing available, from none other that NIST. It's available by a wonderfully short and easy to understand standards document (no, I'm not kidding).

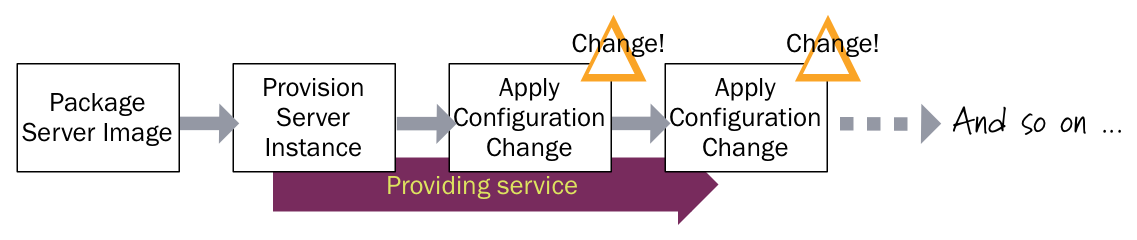

Snowflake Server

It can be finicky business to keep a production server running. You have to ensure the operating system and any other dependent software is properly patched to keep it up to date. Hosted applications need to be upgraded regularly. Configuration changes are regularly needed to tweak the environment so that it runs efficiently and communicates properly with other systems. This requires some mix of command-line invocations, jumping between GUI screens, and editing text files.

The result is a unique snowflake - good for a ski resort, bad for a data center.

Phoenix Server

One day I had this fantasy of starting a certification service for operations. The certification assessment would consist of a colleague and I turning up at the corporate data center and setting about critical production servers with a baseball bat, a chainsaw, and a water pistol. The assessment would be based on how long it would take for the operations team to get all the applications up and running again.