during: 2025

I've been kidnapped by Robert Caro

His books are huge, but I can't put them down.

Exploring Generative AI

Generative AI, and particularly LLMs (Large Language Models), have exploded into the public consciousness. Like many software developers, Birgitta is intrigued by the possibilities, but unsure what exactly it will mean for our profession in the long run. She has taken on a role at Thoughtworks to coordinate our work on how this technology will affect software delivery practices. On this page she posts a series of memos to describe what she and our colleagues are learning and thinking.

Emerging Patterns in Building GenAI Products

As we move software products using generative AI technology from proof-of-concepts into production systems, we are uncovering a range of common patterns. Evals play a central role in ensuring that these non-deterministic systems are operating within sensible boundaries. Large Language Models need enhancement to provide information beyond a generic and static training set. Most of the time we can do this with Retrieval Augmented Generation (RAG), although the basic RAG approach requires several patterns to overcome its limitations. When RAG isn't enough, Fine Tuning becomes worthwhile.

The DeepSeek Series: A Technical Overview

The appearance of DeepSeek Large Language Models has caused a lot of discussion and angst since their latest versions appeared at the beginning of 2025. But much of the value of DeepSeek's work comes from the papers they have published over the last year. This article provides an overview of these papers, highlighting three main arcs in this research: a focus on improving cost and memory efficiency, the use of HPC Co-Design to train large models on limited hardware, and the development of emergent reasoning from large-scale reinforcement learning.

Forest And Desert

The Forest and the Desert is a metaphor for thinking about software development processes, developed by Beth Andres-Beck and hir father Kent Beck. It posits that two communities of software developers have great difficulty communicating with each other because they live in very different contexts, so advice that applies to one sounds like nonsense to the other.

Where Is SW Development Going?

I was on a panel at goto Copenhagen with Holly Cummins, Trisha Gee, Dave Farley, and Daniel Terhorst-North. We discussed the current state of software development and where it was heading. Given the timing, there was much discussion about the role AI would play in our profession's future.

Growing the Development Forest

Luca Rossi hosts a podcast (and newsletter) called Refactoring, so it's obvious that we have some interests in common. The title comes from me leaning heavily on Beth Andres-Beck and Kent Beck's metaphor of The Forest and The Desert. We talk about the impact of AI on software development, the metaphor of technical debt, and the current state of agile software development.

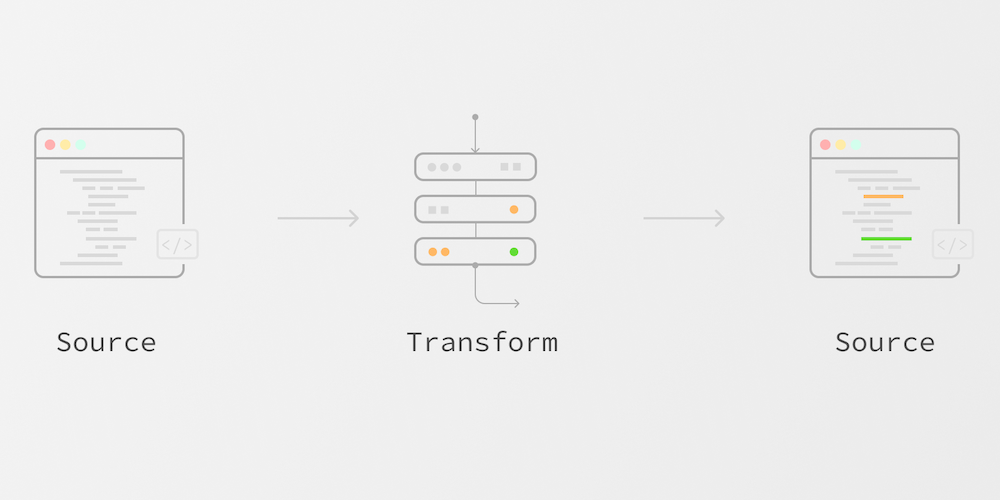

Refactoring with Codemods to Automate API Changes

Refactoring is something developers do all the time—making code easier to understand, maintain, and extend. While IDEs can handle simple refactorings with just a few keystrokes, things get tricky when you need to apply changes across large or distributed codebases, especially those you don’t fully control. That’s where codemods come in. By using Abstract Syntax Trees (AST), codemods allow you to automate large-scale code changes with precision and minimal effort, making them especially useful when dealing with breaking API changes. This article looks at how codemods can help manage these challenges, with practical examples like removing feature toggles or refactoring complex React components. We’ll also discuss potential pitfalls and how to avoid them when using codemods at scale.
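
To give a feel for the idea, here is a minimal sketch of a codemod that removes a feature toggle, written with Python's standard `ast` module. It is an illustration only, not the article's own code: the `feature_enabled` function, the `"new-checkout"` toggle name, and the `checkout` sample are all hypothetical. The transformer walks the syntax tree, finds `if feature_enabled("<name>"):` checks, and keeps only the enabled branch.

```python
import ast


class RemoveToggle(ast.NodeTransformer):
    """Replace `if feature_enabled("<name>"): A else: B` with A.

    `feature_enabled` is a hypothetical toggle API assumed for this sketch.
    """

    def __init__(self, toggle_name: str) -> None:
        self.toggle_name = toggle_name

    def _is_toggle_check(self, test: ast.expr) -> bool:
        # Match exactly: feature_enabled("<toggle_name>")
        return (
            isinstance(test, ast.Call)
            and isinstance(test.func, ast.Name)
            and test.func.id == "feature_enabled"
            and len(test.args) == 1
            and isinstance(test.args[0], ast.Constant)
            and test.args[0].value == self.toggle_name
        )

    def visit_If(self, node: ast.If):
        self.generic_visit(node)  # rewrite nested statements first
        if self._is_toggle_check(node.test):
            # Returning a list splices the enabled branch in place of the If.
            return node.body
        return node


def remove_toggle(source: str, toggle_name: str) -> str:
    """Parse the source, drop the toggle check, and emit new source."""
    tree = RemoveToggle(toggle_name).visit(ast.parse(source))
    ast.fix_missing_locations(tree)
    return ast.unparse(tree)  # requires Python 3.9+


# Hypothetical input used only to exercise the sketch.
SAMPLE = '''
def checkout(cart):
    if feature_enabled("new-checkout"):
        return new_flow(cart)
    else:
        return old_flow(cart)
'''

result = remove_toggle(SAMPLE, "new-checkout")
print(result)
```

One limitation of this sketch is that `ast.unparse` regenerates the source from the tree, so comments and original formatting are lost. Dedicated codemod tools such as jscodeshift (JavaScript) or LibCST (Python) are designed to preserve them, which matters when the change is applied across many files.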